Root Cause Analysis

Root cause analysis is the process of identifying the underlying cause of each defect.

Basic root cause analysis can often be extremely illuminating. If 50% of your software defects are directly attributable to poor requirements, then you know you need to fix your requirements specification process. On the other hand, if all you know is that your customer is unhappy with the quality of the product, then you will have to do a lot of digging to uncover the answer.

To perform root cause analysis, you need to be able to capture the root cause of each defect. This is normally done by providing a field in the defect tracking system that can be used to classify the cause of each defect (who decides what the root cause is can be a point of contention!).

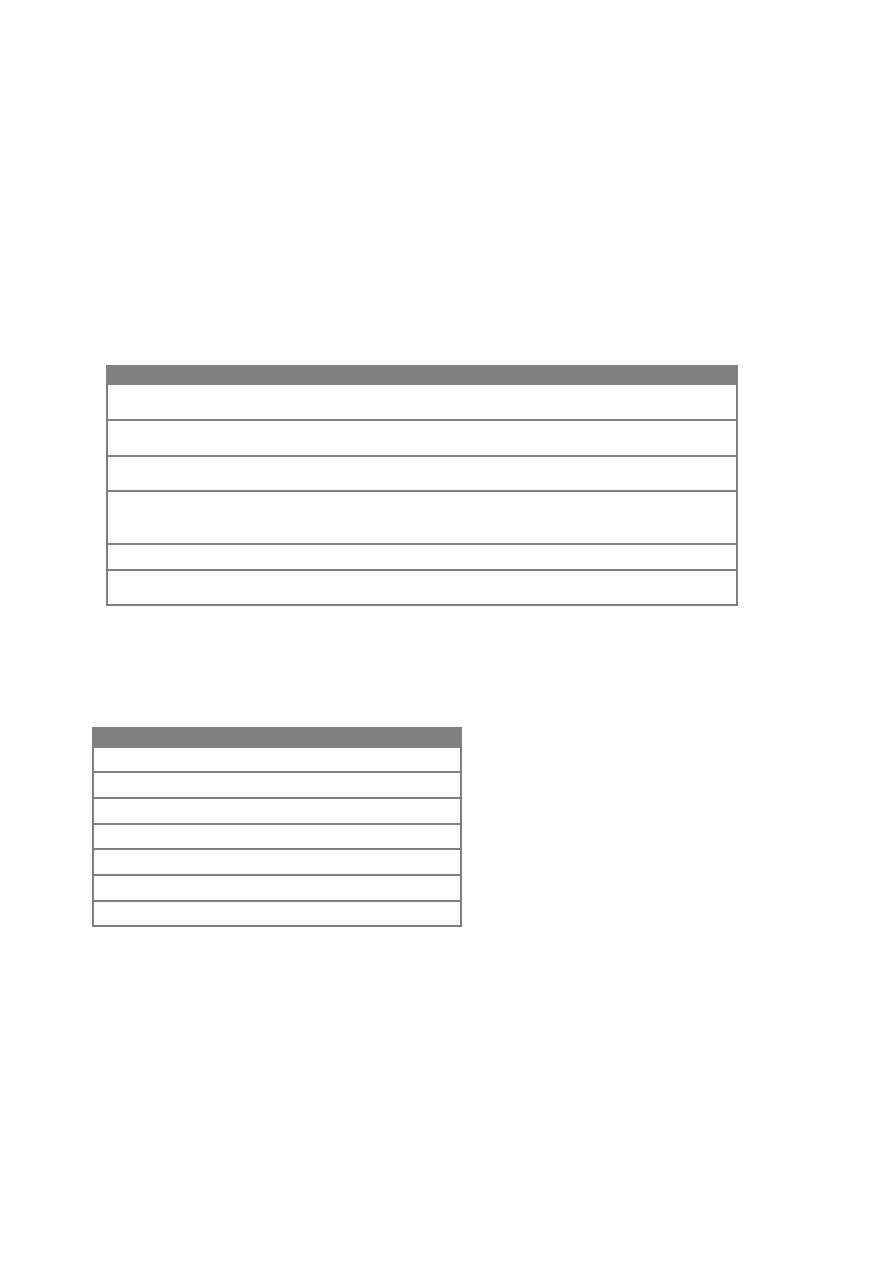

A typical list might look like this:

| Classification | Description |
|---|---|
| Requirements | The defect was caused by an incomplete or ambiguous requirement, and the resulting assumption differed from the intended outcome. |
| Design Error | The design differs from the stated requirements, or is ambiguous or incomplete, resulting in assumptions. |
| Code Error | The code differs from the documented design or requirements, or a syntactic or structural error was introduced during coding. |
| Test Error | The test as designed was incorrect (deviating from the stated requirements or design), was executed incorrectly, or its output was incorrectly interpreted by the tester, resulting in a defect "logged in error". |
| Configuration | The defect was caused by an incorrectly configured environment or incorrect data. |
| Existing bug | The defect is existing behaviour in the current software (this classification does not determine whether or not it is fixed). |

Sub-classifications are also possible, depending on the level of detail you wish to go to. For example, what kind of requirement errors are occurring? Are requirements changing during development? Are they incomplete? Are they incorrect?
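As a minimal sketch, the classifications in the list above, plus an optional requirements sub-classification, could be modelled as a field on a defect record. The `Defect`, `RootCause` and `RequirementsSubCause` names are hypothetical and do not correspond to any particular defect tracking system; the sub-classification values simply mirror the questions above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RootCause(Enum):
    """Top-level root-cause classifications from the list above."""
    REQUIREMENTS = "Requirements"
    DESIGN_ERROR = "Design Error"
    CODE_ERROR = "Code Error"
    TEST_ERROR = "Test Error"
    CONFIGURATION = "Configuration"
    EXISTING_BUG = "Existing bug"


class RequirementsSubCause(Enum):
    """Hypothetical sub-classifications for requirement-related defects."""
    CHANGED = "Changed during development"
    INCOMPLETE = "Incomplete"
    INCORRECT = "Incorrect"


@dataclass
class Defect:
    """A minimal, hypothetical defect record carrying a root-cause field."""
    defect_id: str
    summary: str
    root_cause: Optional[RootCause] = None  # left empty until the cause is agreed
    sub_cause: Optional[RequirementsSubCause] = None

    def __post_init__(self) -> None:
        # Only requirement defects may carry a requirements sub-classification.
        if self.sub_cause is not None and self.root_cause is not RootCause.REQUIREMENTS:
            raise ValueError("sub_cause is only valid for REQUIREMENTS defects")


# Example: a defect traced back to an incomplete requirement.
bug = Defect("D-101", "Report total ignores refunds",
             RootCause.REQUIREMENTS, RequirementsSubCause.INCOMPLETE)
print(bug.root_cause.value, "/", bug.sub_cause.value)
```

Leaving `root_cause` optional reflects that the cause may still be under discussion when the defect is first logged.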

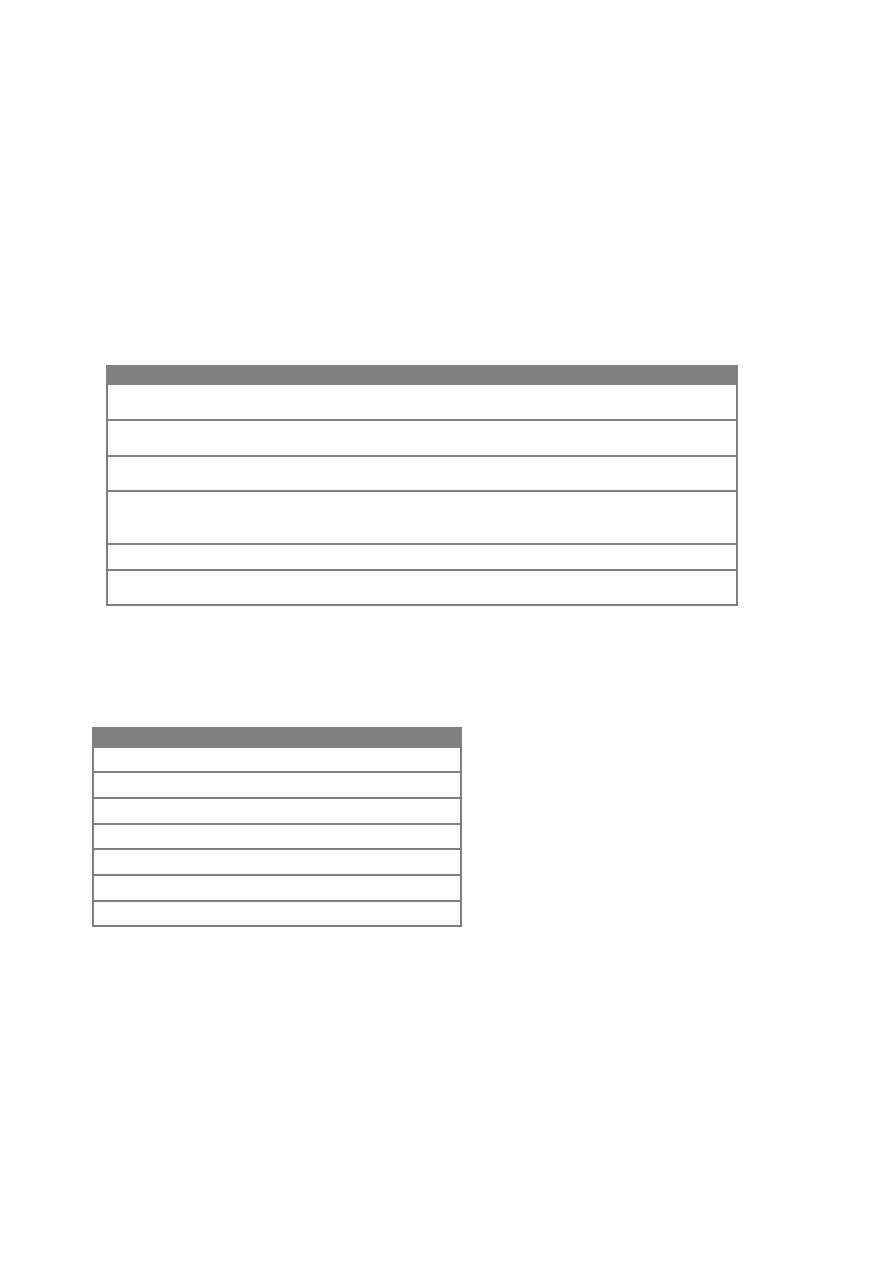

Once you have this data, you can quantify the proportion of defects attributable to each cause.

In Figure 14, 32% of defects are attributable to mistakes by the test team, a huge proportion. While there are obviously issues with requirements, coding and configuration, the large number of test errors means there are major issues with the accuracy of testing. Although most of these defects will be rejected and closed, a substantial amount of time will be spent diagnosing and debating them.

This table can be broken down further by other defect attributes such as "status" and "severity". You might find, for example, that "high" severity defects are attributable to code errors but "low" severity defects are configuration related.
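A breakdown by severity can be sketched as a simple cross-tabulation. This assumes each defect is a dictionary with hypothetical `root_cause` and `severity` keys; the sample records are invented only to show the mechanics and are not taken from Figure 14.

```python
from collections import Counter


def breakdown(defects: list[dict[str, str]], attribute: str) -> dict[str, Counter]:
    """Count root causes separately for each value of another defect attribute."""
    table: dict[str, Counter] = {}
    for defect in defects:
        # One Counter per attribute value, e.g. one for "high" and one for "low".
        table.setdefault(defect[attribute], Counter())[defect["root_cause"]] += 1
    return table


# Hypothetical defect log; field names and values are illustrative only.
defects = [
    {"id": "D-1", "root_cause": "Code Error", "severity": "high"},
    {"id": "D-2", "root_cause": "Code Error", "severity": "high"},
    {"id": "D-3", "root_cause": "Configuration", "severity": "low"},
    {"id": "D-4", "root_cause": "Configuration", "severity": "low"},
    {"id": "D-5", "root_cause": "Requirements", "severity": "high"},
]

for severity, causes in breakdown(defects, "severity").items():
    print(severity, dict(causes))
```

Passing `"status"` instead of `"severity"` would give the status breakdown, provided the records carry that (equally hypothetical) key.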

A more complete analysis can be conducted by identifying both the root cause (as above) and how the defect was identified. This can either be a classification of the phase in which the defect was identified (design, unit test, system test, etc.) or a more detailed analysis of the technique used to discover the defect (walkthrough, code inspection, automated test, etc.). This gives you an overall picture of what causes defects and how they are detected, which helps you determine which of your defect removal strategies are most effective.
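One way to combine the two dimensions is a matrix of root cause against detection phase, read row by row to see where each kind of defect is being caught. The sketch below assumes hypothetical `(root cause, phase)` pairs and a fixed list of phases; the data is illustrative only.

```python
from collections import Counter

# Hypothetical detection phases and (root cause, phase) pairs; illustrative only.
PHASES = ["design", "unit test", "system test"]
records = [
    ("Requirements", "system test"),
    ("Requirements", "system test"),
    ("Requirements", "design"),
    ("Code Error", "unit test"),
    ("Code Error", "unit test"),
    ("Code Error", "system test"),
    ("Design Error", "design"),
]


def phase_shares(matrix: Counter, cause: str, phases: list[str]) -> dict[str, int]:
    """Return the percentage of one root cause's defects found in each phase."""
    total = sum(matrix[(cause, phase)] for phase in phases)
    if total == 0:
        return {}  # no defects of this cause were recorded
    return {phase: round(100 * matrix[(cause, phase)] / total) for phase in phases}


# Count every (root cause, detection phase) combination in one pass.
matrix = Counter(records)

for cause in sorted({cause for cause, _ in records}):
    print(f"{cause:<14}", phase_shares(matrix, cause, PHASES))
```

If, for instance, most requirement defects only surface in system test, that would suggest earlier reviews are not catching them.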

| Classification | Count | % |
|---|---:|---:|
| Requirements | 12 | 21% |
| Design Error | 5 | 9% |
| Code Error | 9 | 16% |
| Test Error | 18 | 32% |
| Configuration | 9 | 16% |
| Existing bug | 3 | 5% |
| TOTAL | 56 | 100% |

Figure 14: Sample root cause analysis
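The percentages in Figure 14 are each cause's count divided by the total of 56 defects. A minimal sketch of that calculation, assuming each logged defect is represented only by its root-cause label (the `root_cause_summary` name is hypothetical):

```python
from collections import Counter
from typing import Iterable


def root_cause_summary(causes: Iterable[str]) -> list[tuple[str, int, int]]:
    """Count defects per root cause and express each as a whole-number percentage.

    Returns (cause, count, percent) rows, largest count first.
    """
    counts = Counter(causes)
    total = sum(counts.values())
    return [(cause, n, round(100 * n / total)) for cause, n in counts.most_common()]


# Reproduce the counts in Figure 14 (one label per logged defect).
figure_14 = (
    ["Requirements"] * 12
    + ["Design Error"] * 5
    + ["Code Error"] * 9
    + ["Test Error"] * 18
    + ["Configuration"] * 9
    + ["Existing bug"] * 3
)

for cause, count, pct in root_cause_summary(figure_14):
    print(f"{cause:<14}{count:>4}{pct:>5}%")
```

Because each share is rounded independently, the rounded values for this data sum to 99% rather than exactly 100%.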