A Metric Leading to Agility

By: Ron Jeffries

Nearly every metric can be perverted, since up- and down-ticks in the metric can come from good or bad causes. Teams driven by metrics often game the metrics rather than deliver useful software. Ask the team to deliver and measure Running Tested Features, week in and week out, over the course of the entire project. Keeping this single metric looking good demands that a team become both agile and productive.

Can Metrics Lead to Agility?

Camille Bell, on the Agile Project Management mailing list, asked about using metrics to produce agility. In the ensuing discussion, I offered this:

What is the Point of the Project?

I'm just guessing, but I think the point of most software development projects is software that works, and that has the most features possible per dollar of investment. I call that notion Running Tested [Features], and in fact it can be measured, to a degree.

Imagine the following definition of RTF:

1. The desired software is broken down into named features (requirements, stories) which are part of what it means to deliver the desired system.

2. For each named feature, there are one or more automated acceptance tests which, when they work, will show that the feature in question is implemented.

3. The RTF metric shows, at every moment in the project, how many features are passing all their acceptance tests.

How many customer-defined features are known, through independently-defined testing, to be working? Now there's a metric I could live with.
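To make the three-part definition concrete, here is a minimal sketch in Python. The `Feature` class, the `rtf` function, and the sample feature and test names are all hypothetical, invented for illustration; the article does not prescribe any tooling. The sketch also assumes that a feature with no acceptance tests does not count, since nothing yet shows that it works.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """A named, customer-defined feature plus its latest acceptance-test results."""
    name: str
    # Maps acceptance-test name -> True if that test passed on the latest run.
    test_results: dict[str, bool] = field(default_factory=dict)

    def is_running_and_tested(self) -> bool:
        # Assumption: a feature with no acceptance tests is not counted.
        return bool(self.test_results) and all(self.test_results.values())


def rtf(features: list[Feature]) -> int:
    """Count the features whose acceptance tests are all passing right now."""
    return sum(1 for f in features if f.is_running_and_tested())


# Hypothetical sample data for illustration only.
features = [
    Feature("login", {"valid_password": True, "bad_password_rejected": True}),
    Feature("search", {"finds_exact_match": True, "handles_no_results": False}),
    Feature("export", {}),  # no tests yet, so not counted
]
print(rtf(features))  # 1
```

Recording this count after every build, or at least every week, gives the points for the RTF curve discussed next.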

What Should the RTF Curve Look Like?

With any metric, we need to be clear about what the curve should look like. Is the metric defects? We want them to go down. Is it lines of code? Then, presumably, we want them to go up. Number of tests? Up.

RTF should increase essentially linearly from day one through to the end of the project. To accomplish that, the team will have to learn to become agile. From day one, until the project is finished, we want to see smooth, consistent growth in the number of Running, Tested Features.

* Running means that the features are shipped in a single integrated product.

* Tested means that the features are continuously passing tests provided by the requirements givers -- the customers in XP parlance.

* Features? We mean real end-user features, pieces of the customer-given requirements, not techno-features like "Install the Database" or "Get Web Server Running".

Running Tested Features should start showing up in the first week or two of the project, and should be produced in a steady stream from that day forward. Wide swings in the number of features produced (typically downward) are reason to look further into the project to see what is wrong.

But our purpose here is to provide a metric which, if the team can produce to the graph, will induce them to become agile. A team producing Running Tested Features in a straight line from day zero to day N will have to become agile to do it. They can't do big design up front -- if they do, they'll ship no features. They can't skip acceptance testing -- if they do, their Features aren't Tested. They can't skip refactoring -- if they do, their curve will drop. And so on.

It might be possible to game RTF. My guess, however, is that it would be easier just to become agile. And the thing is, it would take a truly evil team to respond to demands for features with lies. And I don't think there are many evil teams out there.

The reason that XP and Scrum and Crystal Clear work is that they demand the regular delivery of real software. That's what RTF is about.

What Should RTF NOT Look Like?

The RTF curve isn't right if it starts flat for a few months. RTF metrics are right when features start coming out right away. BDUF projects and projects that focus on infrastructure first need not apply.

RTF isn't right if it starts fast with features and then slows down. Projects that don't refactor need not apply.

RTF isn't right unless it starts at day one of the project and delivers a consistent number of features right along.

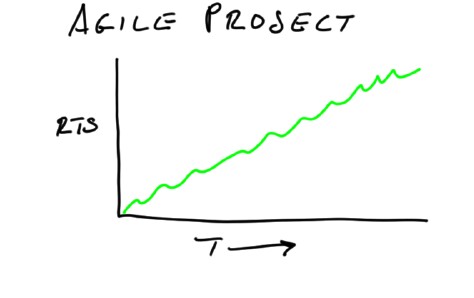

Let's look at how this metric might look on an agile project, and in comparison, a waterfall project.

Agile Projects: Early Features

An Agile project really does focus on delivering completed features from the beginning. Assuming that the features are tested as you go, the RTF metric for an agile project could look like this:

This is a very simple metric, often called the "burn-up" chart. Real live features, done, tested, delivered consistently from the very beginning of the project to the very end. Demand this from any project, and they must become agile in response to the demand.

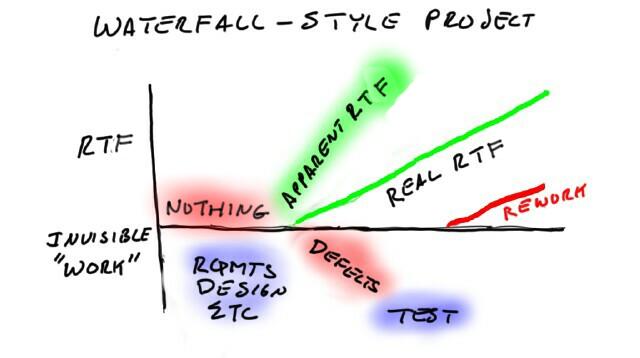

Waterfall: Features Later On

Waterfall-style projects "deliver" things other than RTF early on. They deliver analysis documents, requirements documents, design documents, and the like, for a long time before they start delivering features. The theory is that when they start delivering features, it will be the right features, done right, because they have the right requirements and the right design. Then they code, which might be considered Delivered Software, but not Running Tested Features, because the features aren't tested, and often don't really run at all. Finally they test, which mostly discovers that the RTF progress wasn't what they thought. It looks like this:

Look at all the fuzzy stuff on this picture. There's blue fuzzy unpredictable overhead in requirements and design and testing. We can time-box these, and often do, but we don't know how much work is really done, or how good it is.

And the apparent feature progress itself may also be fuzzy, if the project is planning to do post-hoc testing. We think that we have features done, but there is some fuzzy amount of defect generation going on. After a bout of testing -- itself vaguely defined -- we get a solid defect list, and do some rework. Only then do we really know what the RTF curve looked like.

The bottom line is that a non-agile project simply cannot produce meaningful, consistent RTF metrics from day one until the end. The result, if we demand them, is that a non-agile project will look bad. Everyone will know it, and will push back against the metric. If we hold firm, they'll have no choice but to become more agile, so as to produce decent RTF.

Ron, You're Being Unfair!

One might argue that I'm being unfair in drawing these pictures this way. Surely there are hidden and vague things going on in an Agile project as well. And surely there are ways to measure requirements quality, design quality. There might even be ways to predict testing and to estimate the number of defects. And surely, it can't all be that simple!

Well, as Judge Roy Bean said, if you wanted fair you should have gone somewhere else. My job here is to shoot waterfall in the back. But in all honesty, that's the fate non-agile processes deserve. The waterfall chart shows graphically why: the things we do in non-agile style are difficult to measure, and do not measure what we really care about.

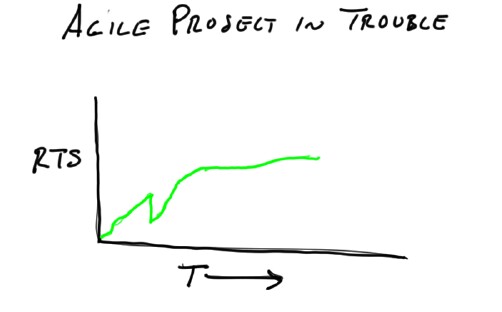

The metric I'm calling here "RTF", Running Tested Features, can be made as concrete as we wish. All we have to do is to define our feature stories in terms of concrete, independent, acceptance tests, which must pass. The RTF metric is very hard to game, and it will show just what's happening on the project. As an example, let's look at another project's RTF curve:

What's going on here? Early on, we see a sudden drop in RTF. What could that indicate? We don't know, but we know it probably isn't good. Perhaps the programmers have suddenly made a change that breaks a bunch of tests. We'd better hunt them down and hold their feet to the fire. But wait: maybe the requirements have changed, and the tests have been changed to reflect the new requirements, and the software has taken a setback. What we know is that there's something to learn, but as yet we don't know what. What should we do? Ask the team!

Then, later in the project, progress flattens out. Again, clearly trouble. But what is the cause? It might be that the team isn't doing decent refactoring to keep the design clean, and they have slowed down. It might be that we've taken all the programmers off the project. It might be that the customer has stopped defining requirements, or stopped writing tests. Again, we don't know. Ask the team!
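The two warning signs just described, a sudden drop and a flattening, are easy to spot mechanically once RTF is recorded each week. The following is a rough sketch only: the function name `flag_rtf_trouble`, the three-week plateau threshold, and the sample numbers are assumptions made for illustration. It flags where to look; it cannot say why the curve moved, which is still a question for the team.

```python
def flag_rtf_trouble(rtf_by_week: list[int], flat_weeks: int = 3) -> list[str]:
    """Return warnings for drops and plateaus in a weekly RTF series.

    Weeks are numbered by list index, so rtf_by_week[0] is week 0.
    """
    warnings = []
    flat_run = 0  # consecutive weeks with no change in RTF
    for week in range(1, len(rtf_by_week)):
        delta = rtf_by_week[week] - rtf_by_week[week - 1]
        if delta < 0:
            warnings.append(f"week {week}: RTF dropped by {-delta}")
            flat_run = 0
        elif delta == 0:
            flat_run += 1
            # Warn once, when the plateau first reaches the threshold.
            if flat_run == flat_weeks:
                warnings.append(f"week {week}: no new features for {flat_weeks} weeks")
        else:
            flat_run = 0
    return warnings


# Hypothetical series: an early drop, then a plateau later on.
series = [2, 5, 8, 4, 7, 10, 13, 13, 13, 13, 14]
for warning in flag_rtf_trouble(series):
    print(warning)
# week 3: RTF dropped by 4
# week 9: no new features for 3 weeks
```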

How does RTF Cause Agility?

* RTF requires feature count to grow from day one. Therefore the team must focus on features, not design or infrastructure.

* RTF requires feature count to grow continuously. Therefore the team must integrate early and often.

* RTF requires features to be tested by independent tests. Therefore the team has comprehensive contact with a customer.

* RTF requires that tests continue to pass. Therefore the tests will be automated.

* RTF requires that the curve grow smoothly. Therefore the design will need to be kept clean.

* With RTF, more features is better. Therefore, the team will learn how to deliver features in a continuous, improving stream. They'll have to invent agility to do it.

The agile methods Scrum and Crystal Clear implement RTF. "All" they ask is that the team ship ready-to-go software every iteration. Everything else follows. XP gives a leg up, in that you get a free list of things that will help.

RTF Demands Agility

If an organization decides to measure project progress by Running Tested Features, demanding continuous delivery of real features, verified to work using independent acceptance tests, the RTF metric, all by itself, will tell management almost everything it needs to know about what's going on. More important, since this metric is really difficult to game, the metric itself will cause the team to manage itself to do what management really wants.

Becoming more agile isn't easy. It requires that the whole organization, and particularly the software team, commit itself to learning a new way of working. Agility is demanded by the metric. The metric just requires that the team deliver Running Tested Features. And that, after all, is the real point of software development.
